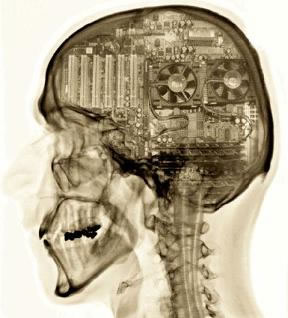

People who believe that the mind can be replicated on a computer tend to explain the mind in terms of a computer. When theorizing about the mind, especially to outsiders but also to one another, defenders of artificial intelligence (AI) often rely on computational concepts. They regularly describe the mind and brain as the “software and hardware” of thinking, the mind as a “pattern” and the brain as a “substrate,” senses as “inputs” and behaviors as “outputs,” neurons as “processing units” and synapses as “circuitry,” to give just a few common examples…

Intriguingly, some involved in the AI project have begun to theorize about replicating the mind not on digital computers but on some yet-to-be-invented machines. As Ray Kurzweil wrote in The Singularity is Near:

> Computers do not have to use only zero and one…. The nature of computing is not limited to manipulating logical symbols. Something is going on in the human brain, and there is nothing that prevents these biological processes from being reverse engineered and replicated in nonbiological entities.

In principle, Kurzweil is correct: we have as yet no positive proof that his vision is impossible. But it must be acknowledged that the project he describes is entirely different from the original task of strong AI to replicate the mind on a digital computer. When the task shifts from dealing with the stuff of minds and computers to the stuff of brains and matter—and when the instruments used to achieve AI are thus altogether different from those of the digital computer—then all of the work undertaken thus far to make a computer into a mind will have had no relevance to the task of AI other than to disprove its own methods. The fact that the mind is a machine just as much as anything else in the universe is a machine tells us nothing interesting about the mind. If the strong AI project is to be redefined as the task of duplicating the mind at a very low level, it may indeed prove possible—but the result will be something far short of the original goal of AI.

If we achieve artificial intelligence without really understanding anything about intelligence itself—without separating it into layers, decomposing it into modules and subsystems—then we will have no idea how to control it. We will not be able to shape it, improve upon it, or apply it to anything useful. Without having understood and replicated specific mental properties on their own terms, we will not be able to abstract them away—or, as the transhumanists hope, to digitize abilities and experiences, and thus upload and download them in order to transfer our consciousnesses into virtual worlds and enter into the minds of others. If the future of artificial intelligence is based on the notion that the mind is really not a computer system, then this must be acknowledged as a radical rejection of the project thus far. It is a future in which the goal of creating intelligence artificially may succeed, but the grandest aspirations of the AI project will fade into obscurity.

(Excerpt)

Ari N. Schulman

The New Atlantis